Between 2001 and 2005, I was a volunteer sub-editor of the EU's Knowledgeboard on KM and emotional intelligence. In fact, my world-class media friends and I hosted chats on many approaches to valuing human relationships, including intellectual, social and emotional capital (these were not exactly my choice of terms). Knowledgeboard was intended to unite all countries in the EU and beyond in what we might now call active intelligence networking that youth could win-win with collaboratively. We discussed which context of risk could most scale collaboration and chose water angels. Actually, this idea ran in my last 12 months at Knowledgeboard, and I haven't been privileged to access a similar worldwide space to mediate since. But the idea of practice contexts that gain most from collaboration now seems to be taking off wherever intelligence is changing practices and livelihoods.

Currently, my favorite question to ask reasoning chats like Grok3 is: what resources should you track if you are either a 15-year-old student or a teacher concerned to double-check the most exciting areas to practice as a 16-to-24-year-old aiming to maximise good as well as livelihood potential in these? We'll use the 1990s spaces of EconomistWater to probe, in various language sources, the wider contexts and language foci of 15 years' intelligence, but the 99 blog space will mainly link in water collaboration intelligence.

QUESTIONS YEAR OF 24-5

Does intelligence modeling have a name for the terrible error of a system that doesn't work at extremes? Today's example: the hills of the City of Angels don't have water pressure when the winds of fire also make it impossible for planes to dump water. The infamous 2008 financial example: insurance companies had taken out a chain of extreme risks that were correlated with each other as well as being actualised by fake ratings.

Tail Risk and Black Swan Events

In risk management and financial modeling, these extreme scenarios are sometimes called "tail events" or "black swan events." They represent rare but high-impact occurrences that fall outside the range of normal expectations and can have severe consequences.

Examples from Recent Events

2025 Los Angeles Wildfires

The recent wildfires in Los Angeles provide a stark example of this type of system failure:

- Water System Breakdown: The city's water infrastructure, designed for normal usage, failed to maintain adequate pressure in hillside areas during extreme fire conditions [16][22].

- Compounding Factors: High winds grounded firefighting aircraft, eliminating a crucial backup method and further straining the already overwhelmed ground-based water system [5][25].

2008 Financial Crisis and AIG

The 2008 financial crisis, particularly the near-collapse of AIG, offers another prime example:

- Correlated Risks: AIG had taken on massive amounts of risk through credit default swaps (CDSs) that were highly correlated with each other and with broader market conditions [30][31].

- Misrated Securities: Many of these risks were based on securities that had been improperly rated, leading to a severe underestimation of the true risk exposure [31][32].

- Systemic Impact: When the housing market collapsed, it triggered a chain reaction that threatened not just AIG but the entire financial system, revealing the hidden interconnectedness of these risks [33][34].
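The correlated-risk point can be made concrete with a small sketch. It assumes a toy model that is not from the sources above: two contracts whose default probabilities both depend on one shared "crisis" state, with all probabilities invented for illustration. It compares the chance that both default together against the figure you would get by wrongly treating them as independent.

```python
def marginal_default(p_crisis: float, p_calm: float, p_stress: float) -> float:
    """Overall default probability of one contract, averaged over calm and crisis states."""
    return (1 - p_crisis) * p_calm + p_crisis * p_stress


def joint_default(p_crisis: float, p_calm: float, p_stress: float) -> float:
    """Probability that two contracts default together.

    Within each state the contracts default independently, but both
    share the same state, which is what links them.
    """
    return (1 - p_crisis) * p_calm ** 2 + p_crisis * p_stress ** 2


# Hypothetical numbers, chosen only to illustrate the effect.
P_CRISIS = 0.02   # chance of a housing-style crisis in the period
P_CALM = 0.01     # default probability of one contract in calm times
P_STRESS = 0.60   # default probability of one contract in a crisis

marginal = marginal_default(P_CRISIS, P_CALM, P_STRESS)
naive_joint = marginal ** 2                       # what an independence assumption predicts
true_joint = joint_default(P_CRISIS, P_CALM, P_STRESS)

print(f"single contract default: {marginal:.4f}")
print(f"both default, assuming independence: {naive_joint:.6f}")
print(f"both default, with shared crisis state: {true_joint:.6f}")
```

In this toy setup the shared crisis state makes a double default more than ten times as likely as the independence assumption suggests, even though each contract looks safe on its own.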

Modeling Challenges

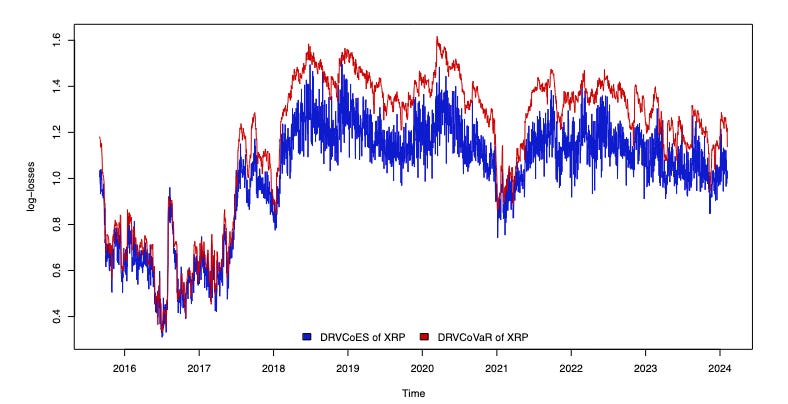

These examples highlight several key challenges in modeling extreme events:

- Underestimation of Tail Risks: Traditional models often underestimate the likelihood and impact of extreme events [15][38].

- Correlation in Extreme Conditions: Many systems that appear independent under normal conditions can become highly correlated during crises [30][32].

- Cascading Failures: The failure of one system can trigger a chain reaction, leading to widespread collapse [11][26].

- Data Limitations: Historical data often lacks sufficient examples of extreme events, making it difficult to model them accurately [8][36].
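To illustrate how a traditional model can underestimate tail risk, here is a minimal sketch comparing two models with the same standard deviation. The choice of a Student-t distribution with 3 degrees of freedom as the heavy-tailed alternative is an assumption made for illustration, not something taken from the sources above.

```python
import math


def normal_tail(k: float) -> float:
    """P(X > k) for a standard normal variable (k in standard deviations)."""
    return 0.5 * math.erfc(k / math.sqrt(2))


def student_t3_tail(k: float) -> float:
    """P(X > k) for a Student-t variable with 3 degrees of freedom,
    rescaled to unit variance so k is also in standard deviations.

    Uses the closed-form CDF for 3 degrees of freedom; the rescaling
    by sqrt(3) cancels, leaving an expression in k alone.
    """
    return 0.5 - (k / (1 + k * k) + math.atan(k)) / math.pi


for k in (3, 5):
    thin = normal_tail(k)
    heavy = student_t3_tail(k)
    print(f"{k}-sigma event: normal {thin:.2e}, heavy-tailed {heavy:.2e}, "
          f"ratio {heavy / thin:.0f}x")
```

Under these assumptions the heavy-tailed model rates a 5-sigma event as thousands of times more likely than the normal model does, which is the kind of gap that stays invisible when the historical record contains few extremes.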

Addressing the Challenge

To better prepare for these extreme scenarios, researchers and policymakers are exploring several approaches:

- Stress Testing: Subjecting systems to simulated extreme conditions to identify vulnerabilities [17][23].

- AI and Machine Learning: Using advanced algorithms to identify complex patterns and potential failure modes that might be missed by traditional models [8][36].

- Interdisciplinary Approaches: Combining insights from multiple fields to better understand and model complex, interconnected systems [20][38].

- Improved Risk Communication: Ensuring that the limitations and uncertainties of models are clearly communicated to decision-makers [38].
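As a rough idea of what stress testing can look like, the sketch below runs a hypothetical hillside water tank through increasingly severe scenarios. Every figure (storage, refill rate, demand, the 25% extra load when aircraft are grounded) and every name is an invented assumption for illustration; none describes the actual Los Angeles system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    demand_multiplier: float   # demand relative to a normal day
    aircraft_available: bool   # False when winds ground water-dropping aircraft


def hours_until_empty(storage_m3: float, refill_m3_per_h: float,
                      normal_demand_m3_per_h: float, scenario: Scenario,
                      no_aircraft_penalty: float = 1.25) -> float:
    """Hours before the tank runs dry, or infinity if refill keeps up with demand."""
    demand = normal_demand_m3_per_h * scenario.demand_multiplier
    if not scenario.aircraft_available:
        # Assumption: ground crews draw extra water to cover the missing air drops.
        demand *= no_aircraft_penalty
    net_drain = demand - refill_m3_per_h
    if net_drain <= 0:
        return float("inf")
    return storage_m3 / net_drain


SCENARIOS = [
    Scenario("normal day", 1.0, True),
    Scenario("heat wave", 1.5, True),
    Scenario("firestorm, aircraft grounded", 4.0, False),
]

results = {s.name: hours_until_empty(4000.0, 500.0, 400.0, s) for s in SCENARIOS}

for name, hours in results.items():
    status = "holds" if hours == float("inf") else f"empties in {hours:.1f} h"
    print(f"{name}: {status}")
```

The point of the exercise is that a design which looks comfortable on a normal day can fail within hours once two stresses arrive together, so the scenarios worth testing are the combined ones.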

Information Asymmetry and Tail Risk Opacity

The scenario you describe, where those with access to deep data chains profit from tail risks while others suffer losses, is a form of information asymmetry. In the context of tail risks, we might call this "tail risk opacity" or "extreme event information asymmetry."

This opacity can lead to:

- Mispricing of risk in markets

- Concentration of losses among less-informed participants

- Potential for exploitation by those with superior information

Nuclear Energy Safety and Autonomous Monitoring

Regarding nuclear energy safety, your concerns about human fallibility in 24/7 monitoring and maintaining peace are valid. The nuclear industry has historically faced challenges related to:

- Human error

- Complacency over time

- Geopolitical instability

Autonomous monitoring could offer:

- Continuous, tireless monitoring

- Rapid detection of anomalies

- Objective data collection and analysis

It would also raise concerns of its own:

- Cybersecurity risks

- Need for human oversight and decision-making

- Potential for new types of errors or biases

Emerging Trends in Nuclear Safety

Recent developments in nuclear plant safety monitoring include:

- Advanced remote monitoring technologies

- AI-powered predictive maintenance

- Drone-assisted inspections
