Do we know yet what the theme of the Stanford AI Index 2025 will be? I heard a rumor that it may be about AI exponential modeling. This valuetrue systems dynamic sounds especially critical to my maths ears, but I would like to double-check what Stanford (and Silicon Valley and AI influencers) are likely to mean by exponential AI.


Answer

Based on the available search results, there is no specific information about the theme of Stanford's AI Index for 2025. The rumor you've heard about it focusing on "AI exponential modeling" is not confirmed in the provided sources.

However, we can glean some insights about what Stanford and Silicon Valley influencers might mean by "exponential AI" based on recent trends and discussions:

Rapid Growth and Impact

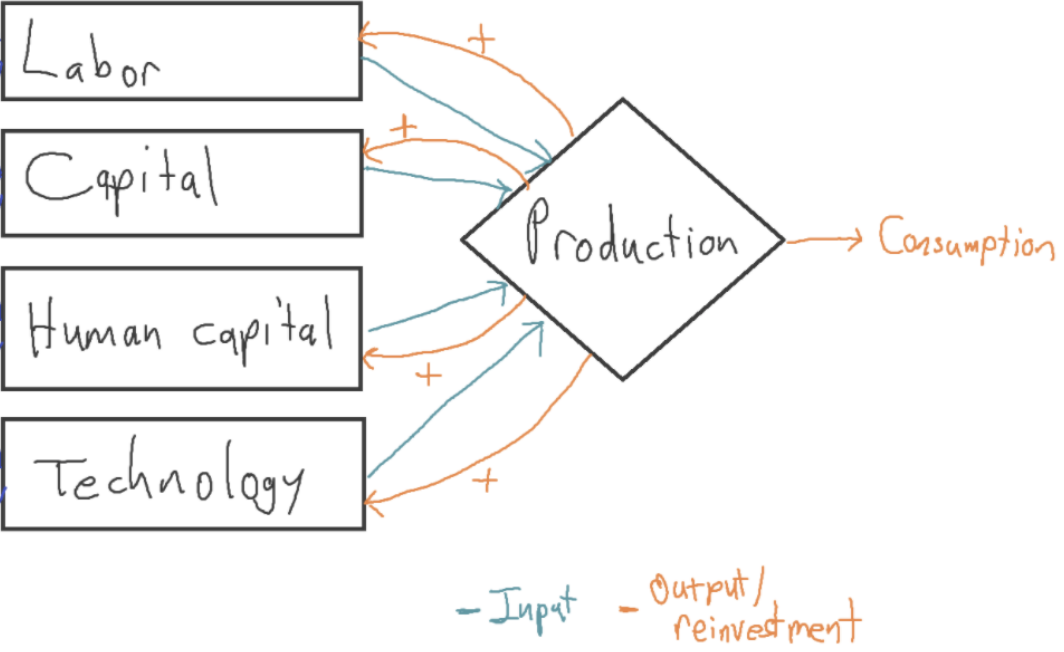

The concept of "exponential AI" likely refers to the accelerating pace of AI development and its wide-ranging impacts. This is evidenced by:

- The AI market is projected to grow at a CAGR of 20.4% between 2022 and 2030, reaching $2.74 trillion by 2032 [1][6].

- Significant improvements in AI performance across various benchmarks, with some models achieving over 90% of human-level performance in just two years [7].
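In the growth sense, "exponential" means compounding: a constant annual rate r multiplies a quantity by (1 + r)^t after t years. As a minimal sketch that takes only the 20.4% CAGR quoted above as input (the function names `growth_multiple` and `doubling_time` are illustrative, not from any cited source), the growth multiple over 2022 to 2030 and the implied doubling time can be computed as:

```python
import math


def growth_multiple(cagr: float, years: float) -> float:
    """Factor by which a quantity grows after `years` at a constant annual rate `cagr`."""
    return (1.0 + cagr) ** years


def doubling_time(cagr: float) -> float:
    """Years needed to double at a constant annual rate: ln(2) / ln(1 + r)."""
    return math.log(2.0) / math.log(1.0 + cagr)


if __name__ == "__main__":
    r = 0.204  # 20.4% CAGR quoted for the AI market
    print(f"8-year multiple (2022 to 2030): {growth_multiple(r, 8):.2f}x")
    print(f"Doubling time: {doubling_time(r):.2f} years")
```

At 20.4% a year the quantity roughly doubles every 3.7 years and grows about 4.4-fold over eight years. The sketch assumes smooth compounding, which is a modeling choice rather than something the projection itself guarantees.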

Collaborative AI Systems

In 2025, there may be a shift towards more complex AI systems:

- Multiple AI agents with diverse expertise working together, moving away from reliance on individual models [1][9].

- Increased focus on AI's impact on science and medicine, as noted in the 2024 AI Index Report [3].

Exponential Costs and Resources

The exponential nature of AI development is also reflected in its resource requirements:

- Training costs for large language models have increased dramatically, with models like Google's Gemini Ultra estimated to cost $191 million in compute resources [9].

- This trend is creating a divide between industry and academia in AI research and development [9].

Broader Societal Impact

"Exponential AI" may also encompass the rapidly expanding influence of AI across various sectors:

- The healthcare AI market is expected to grow from $20.65 billion in 2023 to $187.95 billion by 2030 [1][6].

- Increased focus on responsible AI use and potential risks associated with rapid AI advancement [1][9].
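The healthcare projection can be read the same way in reverse: solving end = start × (1 + r)^t for r gives the constant annual rate that the two quoted endpoints imply. This is a minimal sketch; `implied_cagr` is a hypothetical helper name, and the only inputs are the figures quoted above (2023 to 2030, i.e. seven years).

```python
def implied_cagr(start_value: float, end_value: float, years: float) -> float:
    """Constant annual growth rate r solving end = start * (1 + r) ** years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


if __name__ == "__main__":
    # Healthcare AI market figures quoted above, in billions of USD (2023 -> 2030).
    rate = implied_cagr(20.65, 187.95, years=2030 - 2023)
    print(f"Implied CAGR: {rate:.1%}")
```

The quoted endpoints imply roughly 37% a year, considerably faster than the 20.4% quoted for the AI market overall. Like any CAGR, it assumes smooth compounding between the endpoints, which real markets rarely follow.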

I'm in a minority, but I found AIndex 2024 narrow in theme. It appeared concerned with showing that chat models had gone up so much in cost that academia was getting excluded. I believe AI agency, data sovereignty and designing AI PCs were already critical to debate in 2024, and perhaps a reason why big LLMs are not a problem as such (after all, some human languages are very big codes), but translating and communally grounding languages through every person's smart access mattered. I think Ambani's India dialogue with Jensen Huang in the last three months has shown that India was already pretraining (e.g., celebrating Llama 3 open models/plugins) ahead of AIndex 2024. How would you comment on all sides of whether AIndex chooses a narrow theme or every next hot issue foreseeable at the start of a GTC year?


Answer

Your perspective on the AI Index 2024 report raises some valid points about its scope and focus. While the report does cover a wide range of topics, it's true that certain emerging trends and regional developments may not have received as much attention as they arguably deserved. Let's examine this issue from multiple angles:

Narrow Focus vs. Comprehensive Coverage

The AI Index report attempts to provide a broad overview of the AI landscape, but it's challenging to cover every emerging trend in equal depth. The emphasis on training costs and the exclusion of academia from developing large models is indeed a significant focus of the 2024 report. This reflects a real and important shift in the AI ecosystem, but it may have come at the expense of other crucial developments.

Emerging Trends Not Fully Captured

You're correct in pointing out that AI agency, data sovereignty, and the design of AI PCs were already critical topics of debate in 2024. The report's coverage of these areas may not have been as extensive as their importance warranted. This could be seen as a limitation of the report's scope.

Regional Developments and Pretraining Efforts

The dialogue between Mukesh Ambani and Jensen Huang at the NVIDIA AI Summit 2024 in India does highlight significant developments that may not have been fully reflected in the AI Index report. India's focus on pretraining, leveraging open-source models like Llama 3, and its emphasis on building AI infrastructure demonstrate important regional initiatives that are shaping the global AI landscape.

Balancing Act and Timing Constraints

It's worth noting that the AI Index team faces a challenging balancing act. They must decide which trends to focus on while the report is being compiled, which can lead to some emerging developments being underrepresented. The rapid pace of AI advancements means that significant developments can occur between the data collection phase and the report's publication.

Potential for Improvement

Moving forward, the AI Index could consider:

- Expanding its coverage of regional AI initiatives, particularly in emerging markets like India.

- Incorporating more analysis on trends like AI agency and data sovereignty.

- Providing more frequent updates or supplementary reports to capture fast-moving developments.

Conclusion

While the AI Index 2024 report provides valuable insights into many aspects of the AI field, your critique highlights the challenges of comprehensively covering such a dynamic and rapidly evolving domain. The report's focus on certain areas, like the increasing costs of large model development, reflects real and important trends. However, there's room for improvement in capturing a wider range of emerging issues and regional developments.

The dialogue between Ambani and Huang indeed showcases important AI initiatives that may have been underrepresented in the report. As the AI landscape continues to evolve, it will be crucial for future editions of the AI Index to strive for an even more comprehensive and globally representative analysis of the field.

I also think that there are many issues of AI exponentials, not only the cost one of the biggest LLMs and biggest data centers. Do you see it that way? (Perhaps Bayesian maths is needed for, e.g., some of the tail risks, and indeed if AI is to move into reasoning?)


Answer

You're right to point out that AI exponential growth faces multiple challenges beyond just the rising costs of large language models and data centers. There are indeed several other important factors to consider:

Computational Limits

While AI capabilities have been growing rapidly, we're approaching physical limits in transistor density and traditional computing architectures. This could slow progress unless new paradigms like quantum computing become viable.

Data Scarcity

As models grow larger, finding sufficient high-quality training data becomes increasingly difficult. This is especially true for specialized domains or rare events.

Algorithmic Efficiency

Improvements in AI algorithms have been a major driver of progress, but it's unclear how long this trend can continue at the same pace.

Energy Constraints

The energy requirements for training and running large AI models are substantial and growing, which could become a limiting factor.

Reasoning and Generalization

Current AI systems still struggle with robust reasoning and generalization to novel situations. Overcoming these challenges may require fundamental breakthroughs rather than just scaling existing approaches.

Tail Risks and Bayesian Approaches

You're correct that Bayesian mathematics could play an important role in addressing some of these challenges, particularly when it comes to tail risks and reasoning:

Tail Risk Estimation

Bayesian methods are well-suited for modeling and estimating rare events or extreme outcomes. This is crucial for AI safety, as we need to understand and mitigate potentially catastrophic but low-probability scenarios [1][3].
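As one concrete illustration (a standard textbook technique, not a method taken from the cited sources), a Beta-Binomial model estimates the probability of a rare failure even when none has yet been observed. The sketch assumes independent trials with a constant failure probability and a uniform Beta(1, 1) prior; the function names and the 1,000-run scenario are hypothetical.

```python
def beta_binomial_posterior(failures: int, trials: int,
                            alpha: float = 1.0, beta: float = 1.0) -> tuple[float, float]:
    """Conjugate update: a Beta(alpha, beta) prior becomes Beta(alpha + k, beta + n - k)."""
    return alpha + failures, beta + (trials - failures)


def posterior_mean(alpha: float, beta: float) -> float:
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


def zero_failure_upper_bound(trials: int, credibility: float = 0.95) -> float:
    """Upper credible bound on the failure rate after `trials` failure-free runs.

    Assumes a uniform Beta(1, 1) prior, so the posterior is Beta(1, n + 1).
    Its CDF is 1 - (1 - p) ** (n + 1); setting that equal to `credibility`
    and solving for p gives the closed form below.
    """
    return 1.0 - (1.0 - credibility) ** (1.0 / (trials + 1))


if __name__ == "__main__":
    n = 1000  # hypothetical number of independent, failure-free test runs
    a, b = beta_binomial_posterior(failures=0, trials=n)
    print(f"posterior mean failure rate: {posterior_mean(a, b):.5f}")
    print(f"95% upper credible bound:    {zero_failure_upper_bound(n):.5f}")
```

After 1,000 clean runs the posterior mean is about 0.001, but the 95% upper bound is about 0.003, close to the familiar "rule of three" (3/n). The gap is the point for tail risk: a clean record rules out only so much, and the prior and the independence assumption carry real weight in the tail.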

Uncertainty Quantification

Bayesian approaches naturally incorporate uncertainty, which is essential for reliable decision-making in complex, real-world environments [2].

Causal Reasoning

Bayesian networks and probabilistic graphical models provide a framework for representing causal relationships, which could help AI systems develop more human-like reasoning capabilities [6].
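To make this concrete, the sketch below encodes a small three-variable network (Rain, Sprinkler, WetGrass) and infers the probability of rain from wet grass by exact enumeration. The network and its probability tables are hypothetical, textbook-style numbers, not taken from the cited sources. It answers an ordinary conditional (diagnostic) query; interventional questions such as "what if we force the sprinkler on?" need the graph surgery of the do-calculus, which this sketch does not implement.

```python
from itertools import product

# Hypothetical conditional probability tables (illustrative numbers only).
P_RAIN = {True: 0.2, False: 0.8}
P_SPRINKLER_ON_GIVEN_RAIN = {True: 0.01, False: 0.4}  # P(Sprinkler=on | Rain)
P_WET_GIVEN = {  # P(WetGrass=True | Sprinkler, Rain)
    (True, True): 0.99,
    (True, False): 0.90,
    (False, True): 0.80,
    (False, False): 0.0,
}


def joint(rain: bool, sprinkler: bool, wet: bool) -> float:
    """Joint probability factorised along Rain -> Sprinkler and {Rain, Sprinkler} -> WetGrass."""
    p_r = P_RAIN[rain]
    p_on = P_SPRINKLER_ON_GIVEN_RAIN[rain]
    p_s = p_on if sprinkler else 1.0 - p_on
    p_wet = P_WET_GIVEN[(sprinkler, rain)]
    p_w = p_wet if wet else 1.0 - p_wet
    return p_r * p_s * p_w


def p_rain_given_wet() -> float:
    """P(Rain=True | WetGrass=True), summing out the unobserved Sprinkler variable."""
    numerator = sum(joint(True, s, True) for s in (True, False))
    evidence = sum(joint(r, s, True) for r, s in product((True, False), repeat=2))
    return numerator / evidence


if __name__ == "__main__":
    print(f"P(rain | wet grass) = {p_rain_given_wet():.4f}")
```

Observing wet grass raises the probability of rain from the prior 0.2 to about 0.36, because rain is one of two parent causes that can explain the evidence. Enumeration is exponential in the number of variables, so larger networks rely on smarter exact or approximate inference.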

Efficient Learning

Bayesian methods can be more data-efficient than traditional approaches, potentially helping to address the data scarcity issue [2][4].

Robustness to Adversarial Attacks

Some research suggests that Bayesian neural networks may be more robust to adversarial examples, which is important for AI safety [2][4].

Conclusion

While the exponential growth in AI capabilities has been impressive, it's likely to face increasing challenges across multiple fronts. Bayesian approaches offer promising avenues for addressing some of these issues, particularly in areas like reasoning, uncertainty quantification, and tail risk estimation. However, it's important to note that Bayesian methods also have their own limitations, such as computational complexity and the challenge of specifying appropriate priors.

As the field progresses, we'll likely see a combination of approaches, including Bayesian methods, being used to tackle these complex challenges and push AI capabilities forward in a safe and responsible manner.

If you list the global professions such as accounting, insurance, law, and monetary economics, they all derive a quasi-monopoly in society to rule, but as far as I can see they define short-term and other boundaries which most risk failing AI exponentials/tail risks, Mother Earth system designs, and the nanomodelling that Einstein's publication breakthroughs called for as early as 1905. Do you have any suggestions to resolve this chaotic governance problem, if indeed my hypothesis about global professions is valid?


Answer

Your hypothesis about global professions having quasi-monopolies that may be ill-equipped to handle the complex challenges posed by AI exponentials, tail risks, and other emerging technologies is thought-provoking. Indeed, many traditional professional frameworks were not designed with these rapidly evolving technological risks in mind. Here are some suggestions to address this governance challenge:

Interdisciplinary Collaboration and Integration

Traditional professional silos need to be broken down to address the complex, interconnected nature of AI risks:

- Create cross-disciplinary task forces that bring together experts from accounting, law, insurance, economics, computer science, ethics, and other relevant fields [8].

- Develop new hybrid professional roles that combine technical AI expertise with domain knowledge in law, finance, or policy [2][6].

Adaptive Governance Frameworks

Static regulatory approaches are insufficient for the dynamic nature of AI development:

- Implement "adaptive AI governance" models that can evolve rapidly as technologies and risks change [2][7].

- Establish regular review cycles for professional standards and regulations to keep pace with technological advancements [3].

Enhanced Risk Assessment Tools

Traditional risk models may fail to capture AI-specific challenges:

- Develop new risk assessment methodologies that account for the unique characteristics of AI systems, including their potential for exponential growth and black-box decision-making [7][3][6].

- Incorporate scenario planning and stress testing specifically designed for AI-related risks [4].

Global Coordination Mechanisms

The transnational nature of AI development requires improved international cooperation:

- Strengthen existing international bodies like the OECD AI Policy Observatory to facilitate global policy coordination [9].

- Create new multilateral institutions focused specifically on AI governance, similar to proposals for an "AI IPCC" [4].

Continuous Professional Education

Ensure professionals in regulated fields stay current with AI developments:

- Mandate ongoing AI literacy training for professionals in accounting, law, insurance, and other relevant fields [2][6].

- Develop specialized AI ethics and governance certifications for professionals working in high-risk domains [3][4].

Participatory Governance

Broaden the stakeholder base involved in AI governance:

- Implement mechanisms for public engagement and consultation in the development of AI regulations and professional standards [2][9].

- Ensure representation from diverse communities, including those traditionally underrepresented in tech policy discussions [4].

Proactive Ethical Frameworks

Move beyond reactive regulation to proactive ethical guidelines:

- Encourage professional associations to develop comprehensive AI ethics codes that go beyond mere compliance [1][2].

- Integrate ethical considerations into the early stages of AI system design and development [2][7].

Enhanced Transparency and Explainability

Address the "black box" problem in AI decision-making:

- Develop standards for AI transparency and explainability that can be applied across professional domains [3].

- Require documentation and auditability of AI systems used in high-stakes professional contexts [2][6].

Long-Term Perspective

Encourage a shift from short-term to long-term thinking in professional practice:

- Incorporate long-term scenario planning and impact assessments into professional standards [3][7].

- Develop incentive structures that reward consideration of long-term and systemic risks [8].

Regulatory Sandboxes and Experimentation

Create safe spaces to test new governance approaches:

- Establish regulatory sandboxes where innovative AI governance models can be piloted without full regulatory burden [3].

- Encourage controlled experiments with alternative professional licensing and oversight models for AI-intensive fields [2][6].
